The robotic dog races forwards, metallic jaws stretched wide, sharp teeth glinting in the fluorescent light. Then it turns into a balloon.

Although this sounds like a magic trick, or a scene from a strange nightmare, it is actually a sequence from Awakening, a video game that can be controlled with your mind.

Instead of holding a controller and pressing buttons to manipulate objects and move around in the game, players sit in a chair with a virtual-reality headset strapped on, and nothing in their hands. This might sound like a gimmick, but this kind of technology could change the way we interact with the world, as Ramses Alcaide explained in a talk at the South by Southwest (SXSW) conference in Austin, Texas, in March.

Alcaide, an electrical engineer and neuroscientist and the chief executive of Neurable, demonstrated the technology to an excited audience as his head of marketing Adam Molnar played the game. The driving force behind Neurable is what entrepreneur Elon Musk calls the human body’s “bandwidth problem”.

Our smartphones have given us fast, portable access to a vast range of human knowledge, and we already use the internet as an external storage device. But the way we interact with it is via two incredibly blunt tools: our thumbs. “We’re all cyborgs, we’re just low-efficiency cyborgs,” said Musk – who has his own company, Neuralink, aimed at tackling the problem – in an over-subscribed Q&A session at SXSW.

Engineers all over the planet are attempting to design and build human-machine interfaces to connect our brains directly to our devices. Some, like the team at Neurable, are focused on wearable devices that can read our intentions from our brain waves.

Others are digging into the cortex itself, leaning on advances in materials science to create chips to be implanted into the brain. These devices are already changing the lives of patients with brain damage and disorders, and in the future they could allow us to transmit complex thoughts to our devices.

Eventually human-machine interfaces will bring us closer to our technology. They’ll connect us to our smartphones and wearables, and change the way we interact with the world – for better or worse.

“As we move towards augmented and virtual reality, we need to look at the next step forward in human interaction,” said Alcaide, before his colleague performed digital magic with his mind. “We need to connect our brains directly to the world.”

Brain waves in control

Neurable’s technology uses a plastic headset with VR goggles at the front, and nine sets of electrodes at the back. It’s a slick version of a technology that has been used in psychology labs and hospitals for decades – an electroencephalogram, or EEG.

Traditional EEG is a messy affair – you put on a piece of tight-fitting headgear like a swimming cap, and then gel is injected into holes to link electrodes to the scalp, while trailing wires tether you to a computer that picks up the signals. It’s awkward, unreliable, and you need to wash your hair afterwards.

Neurable’s headset looks much more modern but, as Alcaide explains, the real innovation is in the software. The company has used artificial intelligence and machine learning to make sense of the data that comes spooling out of the measuring equipment – to the untrained eye it looks like the jittery line of a seismograph during an earthquake.

But, after some training, it’s these brain waves that allow players to project their intentions into the game. Simply by wanting something to happen, they create a specific pattern of brain activation that is picked up by Neurable’s software and translated into that movement in the game. At the moment it’s fairly crude. While playing the game, Molnar wasn’t thinking “turn that dog into a balloon” specifically, but he was focusing attention on the robot animal and the system was able to detect that. However, as Alcaide admits, there’s still a long way to go before EEG can be translated into something we can use for all our devices.

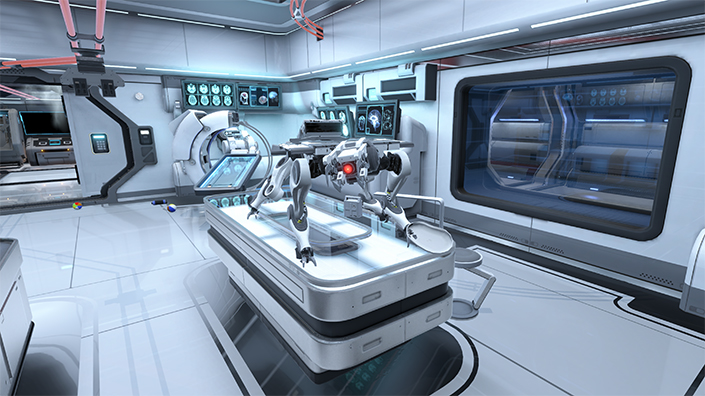

A robotic dog in Awakening, moments before being transformed into a balloon (Credit: Neurable)

One problem is noise. The electrodes don’t penetrate the scalp; they merely sit on top of it, so movements of the body or facial muscles can drown out the faint signals from the brain. It’s hoped that, by using artificial intelligence, software will be able to mitigate some of these problems.
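One long-established way to pull a faint signal out of this kind of noise is to repeat a measurement many times and average the results. The sketch below is illustrative only: it is not Neurable's software, it uses plain averaging rather than artificial intelligence, and the sine-wave "brain signal", the noise level and all function names are assumptions made for the example.

```python
import math
import random
import statistics


def clean_signal(n_samples):
    """Assumed stand-in for a faint brain signal: a slow sine wave of amplitude 1."""
    return [math.sin(2 * math.pi * i / 50) for i in range(n_samples)]


def simulate_trial(rng, n_samples=200, noise_sd=5.0):
    """One simulated recording: the faint signal buried in much larger random noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in clean_signal(n_samples)]


def average_trials(trials):
    """Average the recordings sample by sample across repeated trials."""
    return [statistics.fmean(list(samples)) for samples in zip(*trials)]


def rms_error(recording):
    """Root-mean-square distance between a recording and the clean signal."""
    clean = clean_signal(len(recording))
    squared = [(r - c) ** 2 for r, c in zip(recording, clean)]
    return math.sqrt(statistics.fmean(squared))


rng = random.Random(0)  # fixed seed so the run is repeatable
trials = [simulate_trial(rng) for _ in range(100)]

single_error = rms_error(trials[0])
averaged_error = rms_error(average_trials(trials))

print(f"error in one trial:        {single_error:.2f}")
print(f"error after 100 averaged:  {averaged_error:.2f}")
```

Averaging N repeats shrinks random noise by roughly the square root of N, so 100 repeats cut it about tenfold. The catch is that the user has to repeat the same mental task many times, which costs time.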

The second barrier to widespread adoption is the hardware. As Google found out with Glass, people aren’t yet ready to wear augmented-reality smart glasses with a conspicuous camera, so they’ll be even more reluctant to use a full headset that at the moment still needs to be plugged into a computer. However, Alcaide says Neurable is working on miniaturising the technology, and predicts that it could soon be fitted into something that can be worn in or above the ear like headphones.

Alcaide says his company’s technology could be used to control AR devices with our minds – bringing up directions, or allowing us to select from multiple objects within our visual field. It could also be used to enter search queries: Molnar demonstrated how the EEG headset could be used to type. At the moment, though, that means focusing on each letter in sequence, which is slow.
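To see why typing letter by letter is slow, consider a toy speller. The talk did not describe how the headset works out which letter the user is focusing on, so everything here is an assumption: the sketch imagines that each letter is highlighted in turn, that a hypothetical `attention_score` function returns a noisy reading that is slightly higher for the letter being focused on, and that readings are summed over several passes before a letter is chosen.

```python
import random

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def attention_score(rng, highlighted, target):
    """Hypothetical headset reading: noisy, but higher on average for the focused letter."""
    base = 1.0 if highlighted == target else 0.0
    return base + rng.gauss(0.0, 0.5)


def select_letter(rng, target, repeats=20):
    """Highlight every letter `repeats` times and pick the one with the highest total score."""
    totals = {letter: 0.0 for letter in ALPHABET}
    for _ in range(repeats):
        for letter in ALPHABET:
            totals[letter] += attention_score(rng, letter, target)
    return max(totals, key=totals.get)


def type_word(rng, word, repeats=20):
    """Spell a word one letter at a time."""
    return "".join(select_letter(rng, ch, repeats) for ch in word)


rng = random.Random(1)  # fixed seed so the run is repeatable
word = "HELLO"
typed = type_word(rng, word)

# Each letter needs `repeats` passes over the whole alphabet.
highlights = len(word) * 20 * len(ALPHABET)

print(f"typed: {typed}")
print(f"highlights needed: {highlights}")
```

Under these assumptions, a five-letter word takes 2,600 separate highlights. Fewer passes would be faster but would leave more room for noise to pick the wrong letter.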

Thoughts turn into words

At Massachusetts Institute of Technology’s Media Lab, another wearable device could tackle that problem. Instead of sitting on the head, this white plastic contraption fits over the ear and then traces the line of the wearer’s jaw down to the chin.

It’s designed to measure signals from the muscles of the face and mouth, and to take advantage of a strange quirk of the way our bodies are wired. When you imagine saying a word or performing an action, your brain sends signals to the muscles that would be involved in that movement, even if you don’t actually go on to do it.

The device detects those signals, and can transcribe people’s internal dialogue with an amazing 92% accuracy. It also features bone conduction earphones, which allow people to listen to any responses while still leaving their ears clear to take in the real world.

“The motivation for this was to build an intelligence-augmentation device,” says Arnav Kapur, a graduate student at the MIT Media Lab, who led the development of the system. “Our idea was: could we have a computing platform that’s more internal, that melds human and machine in some ways and that feels like an internal extension of our own cognition?”

The goal was to reduce the intrusiveness of our smartphones. Kapur’s thesis adviser, MIT professor Pattie Maes, says: “If I want to look something up that’s relevant to a conversation I’m having, I have to find my phone and type in the passcode and open an app and type in some search keyword, and the whole thing requires that I shift attention from my environment and the people that I’m with to the phone.”

AlterEgo detects subtle movements of the face to let users silently speak to devices (Credit: Lorrie Lejeune/MIT)

The device, called AlterEgo, would allow users to ask questions of their personal voice assistants without anyone else hearing. It could be useful for very noisy places, such as airports and factories, or situations where silence is key, like military special operations.

However, although it’s impressive, this kind of system is not well suited to controlling augmented-reality devices or physical devices such as robotic arms or exoskeletons. It would be like driving a car using Alexa – slow and not suited to complex instructions.

EEG suffers from the same problem owing to its poor spatial resolution – it’s not very good at telling where in the head a particular pattern of electrical activity originated. If we’re really going to take the next step and start merging with technology, we need something even better. For that, engineers need to get closer to the brain.

Brain implants change lives

On YouTube, you can watch a miracle. Andrew ‘AJ’ Johnson, a New Zealander with early-onset Parkinson’s disease, addresses the camera. His hands shake with the characteristic tremors of the condition, and he has difficulty getting the words out, as neurons in his brain that control his fine motor movements sputter and misfire. It is difficult to watch. But then he flicks a switch, and all the symptoms disappear.

Johnson was just 35 when he was diagnosed in 2009, and his wife was pregnant with their daughter. Two years later, his symptoms became so severe that he had to give up his job in banking. He was, as he subsequently wrote on his blog Young and Shaky, “a 39-year-old trapped in an 89-year-old body”. But in November 2012 and February 2013, he underwent two surgical procedures that changed his life.

In the first, neurosurgeons drilled holes into Johnson’s skull and inserted very fine wires into a target area of the brain. Three months later, they cut a ‘pocket’ into his chest, under his collarbone, where they inserted an electrical device called a neurostimulator.

Like a pacemaker, this box delivers a steady pulse of electricity through the wires in Johnson’s skull – 2.9V to each hemisphere of his brain – in a treatment known as deep-brain stimulation. “DBS is like a giant Band Aid,” says Johnson. “It doesn’t cure the disease, nor does it stop its progression.” But it does provide temporary relief.

AJ Johnson has a brain implant that stops the tremors from Parkinson’s disease (Credit: Andrew Johnson/YouTube)

At the Global Grand Challenges Summit in Washington last year, Johnson’s video – entitled The effects of DBS on the motor symptoms of Parkinson’s disease – got a round of applause from the audience. It was shown during a talk on ‘reverse-engineering the brain’ by Rikky Muller, an assistant professor of electrical engineering at the University of California, Berkeley, and the co-founder of Cortera Neurotechnologies.

Muller also showed a video of a paralysed patient controlling a robotic arm using her brain. Thanks to ‘intra-cortical recording’ – electrodes inserted directly into the patient’s cortex, in among the neurons – she was able to direct the arm to pick up a drink and raise it to her lips.

But these devices and implants have problems, including scarring and a degrading of the neural signal over a few months. “The device that connects to her brain pierces into her cortex, tunnels wires to her skull, and keeps her tethered to a computer,” said Muller. “There’s nothing smart about these sensors.”

At Cortera, Muller and her colleagues are working on miniaturising this technology, making it wireless and less invasive. “Our vision is to create devices that are so small, safe and minimally invasive that they can be implanted in the patient for their lifetime,” she said.

Instead of taking measurements from outside the head, as in EEG, or from within the cortex, as in intra-cortical recording, Cortera is developing sensors that can be surgically implanted on top of the brain. These electrocorticography, or ECoG, systems are currently bulky but, by using flexible, biocompatible polymers, Muller hopes to create tiny chips and antennas that can wirelessly collect power and integrate seamlessly with the brain.

At first, these devices will be used to monitor the brains of patients during surgery or to treat conditions such as Parkinson’s. After that, they will be used to tackle mental illness and give paralysed patients a voice. Eventually, they could wire our brains directly to the internet, and change what it means to be human.

Content published by Professional Engineering does not necessarily represent the views of the Institution of Mechanical Engineers.