Exploring without a compass is reckless – without a map, even worse. Yet that is exactly what one new explorer did last year, zipping through the woods of Hürstwiese state park near Zurich at up to 40km/h.

The explorer in question was a drone, equipped only with cameras and an onboard computer – no lidar, radar or other sensors. The environment was entirely unknown to it, yet it managed to chart a course through the wood, dodging and ducking around trunks, under branches, and over the uneven ground.

The feat was made possible by a new approach to unpiloted flight, developed at the University of Zurich. Involving neural networks and ‘simulated experts’, the technique shows a promising direction for future autonomous flight systems. It could also transform robotic systems used for everything from grocery delivery to extra-terrestrial exploration.

On the edge

Research leader Professor Davide Scaramuzza is not new to advanced autonomous flight. He heads the Robotics and Perception Group at Zurich, where his previous work includes an algorithm-piloted drone that can beat expert human pilots around a course, and a navigation algorithm that enables acrobatic manoeuvres using nothing more than onboard sensor measurements.

In the latest project, Scaramuzza and his team set out to overcome a frustrating limitation for drone operation. Unmanned aerial vehicles (UAVs) are hard to beat for exploring complex environments – they are fast, agile and small, and can carry sensors and payloads virtually anywhere. But they struggle to navigate through unknown environments without a map, so human pilots are currently needed to realise their full potential.

Trying to overcome that challenge is far from simple. Flying without a data connection to a ground-based computer means all navigation decisions must be made onboard, says researcher Elia Kaufmann, co-author of a paper on the project. Algorithms must be made robust to any possible failures, but he says it is very difficult to capture all possible ‘edge cases’ – problems that occur at extreme operating parameters.

Human pilots can handle edge cases because of an ability to generalise, meaning they still perform well even if they see data that has not appeared during training. The Zurich researchers therefore set out to enable generalisation within their system, which could be an important step towards artificial intelligence (AI) pilots outperforming humans in a huge range of applications.

Simulated expert

From the outside, the drone used in the research looks much like any other – a racing quadrotor with a carbon frame, standard motors, and propellers.

“Additionally, our drone is equipped with special cameras, which are used to perform visual inertial odometry for state estimation, such that the drone knows where it is – more or less – in space, as well as perception of the environment using a depth camera,” says Kaufmann. There are four lenses in total, with stereo images provided by both the depth camera and the odometry camera, which includes inertial measurement units that measure angular velocity and acceleration.

The real advances are under the hood. The craft has a powerful mini-computer with an integrated graphics processing unit (GPU), which enables an onboard neural network. It is this that uses the depth images to decide where to fly.

While an onboard neural network is unusual in itself, the most important innovation comes in how it is trained. It learns to fly by observing a ‘simulated expert’, which only flies in the virtual world. Also known as a privileged expert, the program has complete information on the environment, the state of its computer-generated drone, and readings from virtual sensors. It can rely on enough time and computational power to always find the best trajectory – a far cry from the physical drone, which needs to make split-second decisions in unknown environments.

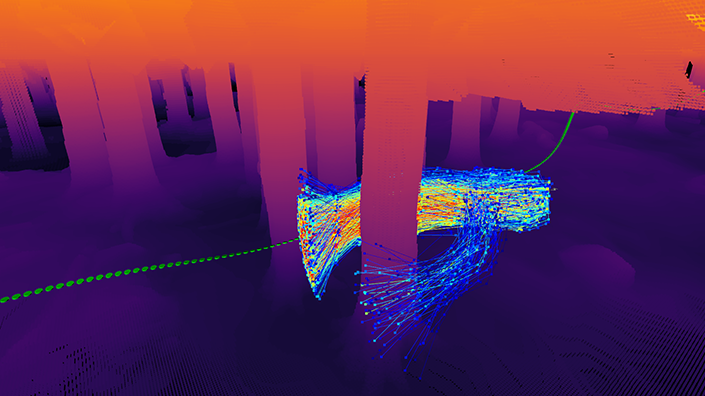

The simulated expert calculates potential routes through the virtual environment

The virtual testing environment is “the perfect situation to deploy traditional planning and control algorithms,” says Kaufmann. They “make exactly this assumption, that you know what your environment looks like and that you know where your platform is”.

The simulated expert uses its millimetre-resolution knowledge of the obstacles and the state of the drone alongside a basic pre-set route to compute a set of trajectories with Monte Carlo sampling, which uses repeated random samples to obtain results. The technique then identifies the optimal route.

“If the trajectory collides with something, this is really not good, and this trajectory for sure will not be selected,” says Kaufmann. “There are also other ‘costs’, such as smoothness and progress towards the goal… this is a very costly and slow process, but we can do this in simulation.”
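The sample-then-score idea Kaufmann describes can be sketched in a few lines of Python. This is a toy illustration, not the researchers' planner. It assumes a flat 2D world, tree trunks modelled as circles, collision checks at waypoints only, and an unweighted sum of smoothness and remaining distance as the cost. All function names and parameter values are hypothetical.

```python
import math
import random

def sample_trajectory(start, goal, n_points, spread, rng):
    """One random candidate: the straight reference route with noisy y offsets."""
    traj = []
    for i in range(1, n_points + 1):
        t = i / n_points
        x = start[0] + t * (goal[0] - start[0])
        y = start[1] + t * (goal[1] - start[1]) + rng.gauss(0.0, spread)
        traj.append((x, y))
    return traj

def collides(traj, obstacles, margin):
    """True if any waypoint lies inside an obstacle circle plus a safety margin."""
    return any(
        math.dist(point, (ox, oy)) < radius + margin
        for point in traj
        for ox, oy, radius in obstacles
    )

def trajectory_cost(traj, obstacles, goal, margin=0.3):
    """Infinite cost for a collision, otherwise smoothness plus distance left to the goal."""
    if collides(traj, obstacles, margin):
        return math.inf  # a colliding trajectory is never selected
    smoothness = sum(
        (a[0] - 2 * b[0] + c[0]) ** 2 + (a[1] - 2 * b[1] + c[1]) ** 2
        for a, b, c in zip(traj, traj[1:], traj[2:])
    )
    remaining = math.dist(traj[-1], goal)
    return smoothness + remaining

def best_trajectory(start, goal, obstacles, n_samples=500, n_points=10,
                    spread=1.5, rng=None):
    """Monte Carlo search: draw many random candidates and keep the cheapest."""
    rng = rng or random.Random()
    best, best_cost = None, math.inf
    for _ in range(n_samples):
        candidate = sample_trajectory(start, goal, n_points, spread, rng)
        cost = trajectory_cost(candidate, obstacles, goal)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best  # None if every sample collided

# Assumed scene: one trunk of radius 1 m sitting on the straight route.
trunk = [(5.0, 0.0, 1.0)]
route = best_trajectory((0.0, 0.0), (10.0, 0.0), trunk, rng=random.Random(0))
```

Scoring hundreds of candidates like this is affordable in simulation, where time is not a constraint, which is the point Kaufmann makes about the process being costly and slow.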

He adds: “In the real world, we do not have this perfect information and we do not have the infinite compute. The ‘magic ingredient’, which basically allows us to deploy this to a real system, is by training a neural network.”

The neural network is trained by observing thousands of examples of what to do when confronted by a certain pattern of obstacles. It learns how to adapt its flight, and imitates the expert as it navigates the real world using only the depth images from the stereo cameras.
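Learning by imitation of this kind can be illustrated with a deliberately tiny example. The sketch below is an assumption-laden toy, not the Zurich system. A three-value 'depth image' (left, centre, right clearance) stands in for the camera input, a hand-written rule stands in for the simulated expert, and a single linear layer stands in for the neural network. All names and numbers are hypothetical.

```python
import random

def expert_steering(depths):
    """Hypothetical privileged expert: steer toward the side with more free space."""
    left, _centre, right = depths
    return 0.5 * (right - left)

def student_steering(weights, bias, depths):
    """The student's guess, computed from the depth values alone."""
    return sum(w * d for w, d in zip(weights, depths)) + bias

def train_student(n_examples=2000, lr=0.05, epochs=20, seed=0):
    """Fit the student to the expert's decisions by stochastic gradient descent."""
    rng = random.Random(seed)
    # Record what the expert does in many randomly generated situations.
    data = []
    for _ in range(n_examples):
        depths = [rng.uniform(0.0, 1.0) for _ in range(3)]
        data.append((depths, expert_steering(depths)))
    weights, bias = [0.0, 0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for depths, target in data:
            error = student_steering(weights, bias, depths) - target
            # Gradient step on the squared imitation error.
            weights = [w - lr * error * d for w, d in zip(weights, depths)]
            bias -= lr * error
    return weights, bias

weights, bias = train_student()
# Obstacle close on the left (small depth), open space on the right.
command = student_steering(weights, bias, [0.2, 0.5, 0.9])
```

After training, the student reproduces the expert's steering without access to the expert's privileged information. The same supervised recipe applies with a deep network and real depth images.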

Agile advantage

The Zurich team’s approach has a major advantage over previous systems – speed. Other autonomous pilots, which can be found on some commercial drones, first use sensor data to create a map of the environment and then plan trajectories within that map.

“If you fly at high speed through the forest, and if you don’t want to crash, then you need two things,” says Kaufmann. “You need to understand where you can fly, and where you cannot. If it takes five seconds for you to understand that something is occupied, it’s already too late.”

The team’s learning-based technique delivers both understanding and speed. The GPU has very low latency – 20 milliseconds, roughly 10 times quicker than expert human pilots – and the neural network understands the environment well, thanks to its training.
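A quick calculation shows why reaction time matters so much. The sketch below uses the 40km/h top speed from the forest flights, the 20-millisecond latency, and the five-second delay from Kaufmann's hypothetical. The 0.2-second figure for a human pilot is an assumption derived from the 'roughly 10 times' comparison, and the function name is illustrative.

```python
def distance_during_delay(speed_kmh, delay_s):
    """Metres travelled before the system has finished reacting."""
    return speed_kmh / 3.6 * delay_s  # km/h -> m/s, then multiply by seconds

print(round(distance_during_delay(40, 0.02), 2))  # onboard network: 0.22
print(round(distance_during_delay(40, 0.2), 1))   # assumed human pilot: 2.2
print(round(distance_during_delay(40, 5), 1))     # five-second mapping delay: 55.6
```

At top speed the drone covers about 22 centimetres while the network reacts, against more than 55 metres for a system that needs five seconds to register an obstacle.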

The approach is aimed at maximising the area that can be covered during the limited flight times afforded by typical drone batteries.

“A multicopter, like a quadcopter or a hexacopter, only lasts for almost 30 minutes,” says Scaramuzza. “The only alternative solution to fly them for longer is to make them fly ‘agilely’. They will still fly for 30 minutes – but by flying more agilely, faster, they will be able to cover longer distances.” The boost to agility could allow search-and-rescue drones to explore more of a collapsed building before the battery runs out, for example.

The simulated expert approach could also prepare drones for a wide range of conditions. According to the research leader, learning from one situation can be extrapolated to others – a neural network that has observed an expert in a forest-like environment could fly through a city, for example. This was not tested in the real world, however.

“Testing in the real world was done in the wild, in snowy environments and forest,” says Scaramuzza. “We wanted to do it also in urban environments but, given that the law doesn’t allow it, we did it in simulated urban environments.”

This could mean that potential issues have not yet been revealed, according to drone expert David Hambling, author of Swarm Troopers: How Small Drones will Conquer the World.

“There is a big difference with urban environments, as far as drones are concerned,” he says. “You get these very distinctive ‘canyon winds’ that blow down urban environments. That’s the kind of thing you would really want your physics to be simulating, because I don’t believe that their forest environment would be simulating the effects of wind.”

Taking flight

Despite the challenges presented by different environments, the simulated expert approach means it should be straightforward to train neural networks on representative simulations for a variety of situations.

The Zurich team believes the method could be used on drones suited for a huge range of applications. The Swiss National Science Foundation is funding drone search-and-rescue research, for example, while a European project is investigating autonomous inspection of power lines, during which drones need to avoid growing vegetation. Others hope the system could be used for agricultural monitoring.

Security applications are another obvious possibility. Local city police have already spoken to the researchers about the system’s potential to tackle incidents such as the drone sightings that cancelled 1,000 flights at Gatwick Airport in 2018, or the malicious use of drones with onboard explosives. The police service is exploring the use of a human-piloted interceptor drone that fires nets at the attacker, but Scaramuzza believes an AI pilot could be even better.

He claims that autonomous systems could also come out on top in drone races, which he describes as “a great proxy for benchmarking the state of the art in autonomous navigation – the perception, learning and control”. Putting the systems on identical drones provides a common ground, allowing easy evaluation of what works best.

As with any drone project, there will inevitably be discussion of potential military applications, both from those keen to put them to use, and those who believe autonomy should be kept out of human conflict. The US Defense Advanced Research Projects Agency already has a project investigating fast flight in an unfamiliar environment, says Hambling, with some “very nifty” drone manoeuvring. “The military are already all over this, you can be assured of that,” he adds.

Martian exploration

While the system used in last year’s Zurich demonstration was specifically designed for drone flight, the underlying process – creating a simulated expert, then using it to train a neural network – could have much wider application.

It remains to be seen if flying taxis will ever reach the paradigm-shifting potential that developers claim, but the Zurich approach could make journeys smoother and quicker. Most prototypes take off vertically and reach cruising altitude before flying point-to-point horizontally, based on GPS. A simulated expert and neural network system could create more organic and efficient flight paths – “more like the DeLorean in Back to the Future,” says Scaramuzza.

The same might be true for autonomous cars, which can already be trained in virtual environments, but the researchers also have much more ambitious ideas. They submitted a paper to the NASA Jet Propulsion Laboratory in California about investigating ‘collaborative exploration scenarios’ on Mars. The researchers believe they could use machine learning to reduce the computing power required for localisation of multiple rotorcraft – like the Ingenuity helicopter currently making flights above the Martian surface – by comparing viewpoints from each craft.

Similar systems could also have much more mundane applications, says Hambling, such as logistics robots that need to pack and unpack stacks of unfamiliar objects.

“There is potentially a gigantic market for robot carers,” he adds. “This is the type of real-world environment that’s very difficult for robotics… the kind of thing where lots of learning and lots of data might be really useful.”

For now, the system is installed only on a drone, and the researchers are keen to improve it further. Reinforcement learning, a training method that rewards good actions and punishes bad ones, could allow UAV-based neural networks to improve from experience, helping to boost lap times or aid collaboration within a swarm.

The researchers are also exploring the addition of event cameras, which they have previously used to allow drones to dodge thrown objects. Each pixel on an event camera is independent of all the others, only streaming information when it detects motion. This enables considerably higher frame rates – 1 million frames per second (fps), compared to 200 fps with current cameras. Adding such a camera to a simulated expert-trained drone could allow the craft to avoid thrown objects, debris from crumbling buildings, and birds.
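The per-pixel behaviour can be approximated in code. The sketch below is a simplification under stated assumptions. A real event camera has no frames and reports changes asynchronously, whereas here two brightness grids are compared and the threshold value is arbitrary. The function name and event format are hypothetical.

```python
def events_between(prev_frame, next_frame, threshold=0.1):
    """Return (row, col, polarity) for each pixel whose brightness changed enough."""
    events = []
    for row, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for col, (before, after) in enumerate(zip(prev_row, next_row)):
            change = after - before
            if abs(change) >= threshold:
                # Polarity records whether the pixel got brighter (+1) or darker (-1).
                events.append((row, col, 1 if change > 0 else -1))
    return events

still = [[0.5, 0.5], [0.5, 0.5]]
moved = [[0.5, 0.9], [0.5, 0.5]]  # something bright enters the top-right pixel
print(events_between(still, still))  # []
print(events_between(still, moved))  # [(0, 1, 1)]
```

A static scene produces no output at all, so the data to process scales with the amount of motion rather than with the number of pixels.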

Future iterations will still not use compass directions or a map – but the researchers nonetheless have a clear direction of travel.

Content published by Professional Engineering does not necessarily represent the views of the Institution of Mechanical Engineers.